- #Install apache spark on windows install#

- #Install apache spark on windows download#

- #Install apache spark on windows windows#

#Install apache spark on windows install#

MacOS

Step 1: Install Spark

Method A: By Hand

The best setup instructions for Spark on MacOS are at the following link:

Spark 2.3.0 is no longer available, but the same process should work with 2.4.4 or 3.0.0.

Method B: Using Homebrew

An alternative on MacOS is to use a tool called Homebrew to install Java, Scala, and Spark, following step-by-step instructions. First, though, you need to install Homebrew itself.

Step 2: Install the Scala IDE

Install the Scala IDE.

Step 3: Test it out!

cd to the directory apache-spark was installed to and then ls to get a directory listing.

Linux

Install Java 8, Scala, and Spark according to the particulars of your specific OS. Be sure to install Spark 3.0.0 or 2.4.4, depending on which version of the course you're taking. Install the Scala IDE from org/download/sdk.html.

To test the installation on Windows, work through these steps in order in the command prompt:

- Enter cd c:\spark and then dir to get a directory listing.
- Look for a text file we can play with, like README.md or CHANGES.txt.
- Start the Spark shell with the spark-shell command. At this point you should have a scala> prompt.
- Enter val rdd = sc.textFile("README.md") (or whatever text file you've found).
- Enter rdd.count(). You should get a count of the number of lines in that file! Congratulations, you just ran your first Spark program!
- Hit control-D to exit the Spark shell, and close the console window.
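As a cross-check on the number that rdd.count() reports, the same file can be counted without Spark, using only the Scala standard library. This is a minimal sketch: the helper name countLines is hypothetical (it is not part of Spark), and it assumes the file is plain text in the platform's default encoding. The two counts should normally agree.

```scala
import scala.io.Source

// Hypothetical helper, not part of Spark: counts the lines of a text file
// using only the Scala standard library.
def countLines(path: String): Long = {
  val source = Source.fromFile(path)
  try source.getLines().size.toLong
  finally source.close() // always release the file handle
}

// Assumption: the shell was started from c:\spark, so README.md resolves
// relative to that folder. Uncomment to compare with rdd.count():
// println(countLines("README.md"))
```

The block can be pasted at the scala> prompt before exiting the shell.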

#Install apache spark on windows windows#

Once winutils.exe is in place, configure Windows as follows:

- If you are on a 32-bit version of Windows, you'll need to search for a 32-bit build of winutils.exe for Hadoop.
- Create a c:\tmp\hive directory, cd into c:\winutils\bin, and run winutils.exe chmod 777 c:\tmp\hive.
- Open the c:\spark\conf folder, and make sure "File Name Extensions" is checked in the "View" tab of Windows Explorer.
- Edit the log4j properties file in that folder (Spark ships it as log4j.properties.template, and reads a copy named log4j.properties) using Wordpad or something similar, and change the log level from INFO to ERROR for log4j.rootCategory.
- Right-click your Windows menu, select Control Panel, System and Security, and then System.
- Click on "Advanced System Settings" and then the "Environment Variables" button.
- Add a user variable named JAVA_HOME, set to the path you installed the JDK to, for example C:\ProgramFiles\Java\jdk1.8.0_101.
- Add the required paths to your PATH user variable.
- Close the environment variable screen and the control panels.
- Open up a Windows command prompt in administrator mode.
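One way to confirm that the JAVA_HOME setting took effect is to inspect it from a Scala prompt. The sketch below is illustrative only: describeVar is a hypothetical helper name, and the check for spaces simply mirrors the advice to install Java to a path without them. Environment variable changes are only visible to command prompts opened after the change, so run it from a fresh window.

```scala
// Hypothetical helper: describes whether an environment variable is set
// and whether its value contains a space.
def describeVar(name: String, value: Option[String]): String = value match {
  case None                       => s"$name is not set"
  case Some(v) if v.contains(" ") => s"$name contains a space: $v"
  case Some(v)                    => s"$name looks fine: $v"
}

// sys.env is the Scala standard library's view of the process environment.
println(describeVar("JAVA_HOME", sys.env.get("JAVA_HOME")))
```

Passing the value in as an Option keeps the helper easy to try with sample values before relying on the real environment.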

#Install apache spark on windows download#

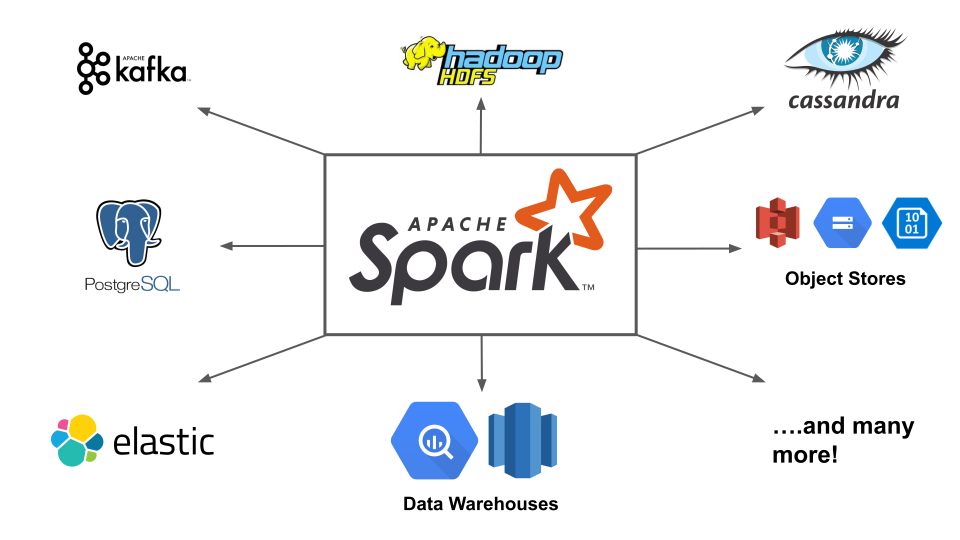

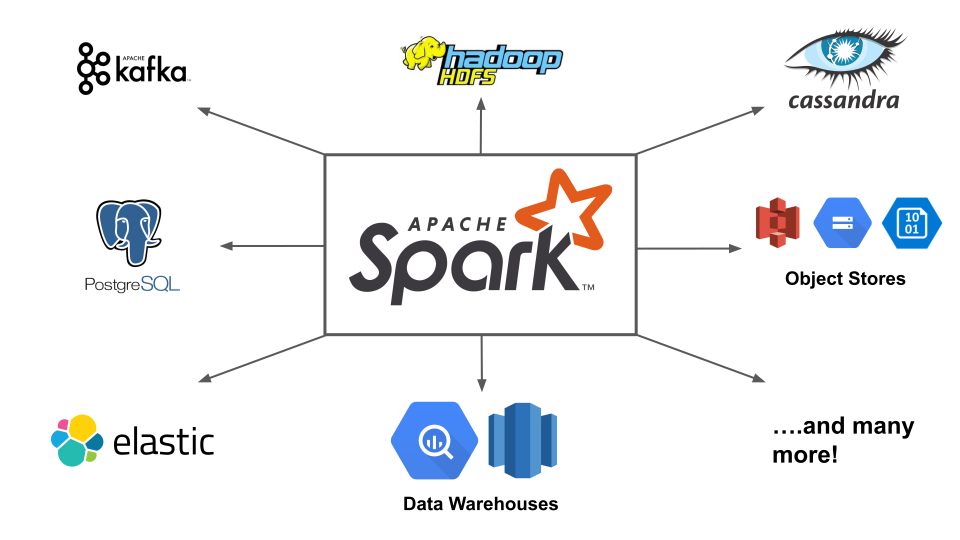

Course Materials

(We have discontinued our Facebook group due to abuse.)

The scripts and data for this course are provided as separate downloads for the Spark 3 version and for the older Apache Spark 2 version. Use the package that matches the version of the course you're taking.

Installing Apache Spark and Scala

You'll need this in the second video lecture of the course.

Windows (MacOS and Linux are covered in their own section):

- Install a JDK (Java Development Kit). Keep track of where you installed the JDK; you'll need that later. DO NOT INSTALL JAVA 9, 10, or 11; INSTALL JAVA 8. Spark 2.4.4 is not compatible with Java 9 or greater, and Java 8 works with both Spark versions used in this course. And BE SURE TO INSTALL JAVA TO A PATH WITH NO SPACES IN IT. Don't accept the default path that goes into "Program Files" on Windows, as that has a space.
- Download a pre-built version of Apache Spark 3.0.0 or 2.4.4, depending on which version of the course you're taking.
- If necessary, download and install WinRAR so you can extract the Spark archive you downloaded.
- Create a C:\spark directory, extract the Spark archive, and copy its contents into C:\spark. You should end up with directories like c:\spark\bin, c:\spark\conf, etc.
- Create a C:\winutils\bin folder, download winutils.exe from s3./winutils.exe, and move it into that folder.
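To see which Java version a Scala prompt is actually running on, the JVM's java.version system property can be read directly. This is a small sketch, not part of the course materials: isJava8 is a hypothetical helper, and it relies on the fact that Java 8 reports its version as 1.8.x while later releases report 9, 10, 11, and so on.

```scala
// Hypothetical helper: true when a java.version string denotes Java 8,
// which reports itself as "1.8.x" (for example "1.8.0_101").
def isJava8(version: String): Boolean = version.startsWith("1.8")

// java.version is a standard JVM system property.
val javaVersion = System.getProperty("java.version")
println(s"Running on Java $javaVersion, Java 8: ${isJava8(javaVersion)}")
```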
